![SOLVED: (30 pts) Ridge regression closed-form estimator](https://cdn.numerade.com/ask_images/27880125018a46de98fe2ba9dabb347d.jpg)

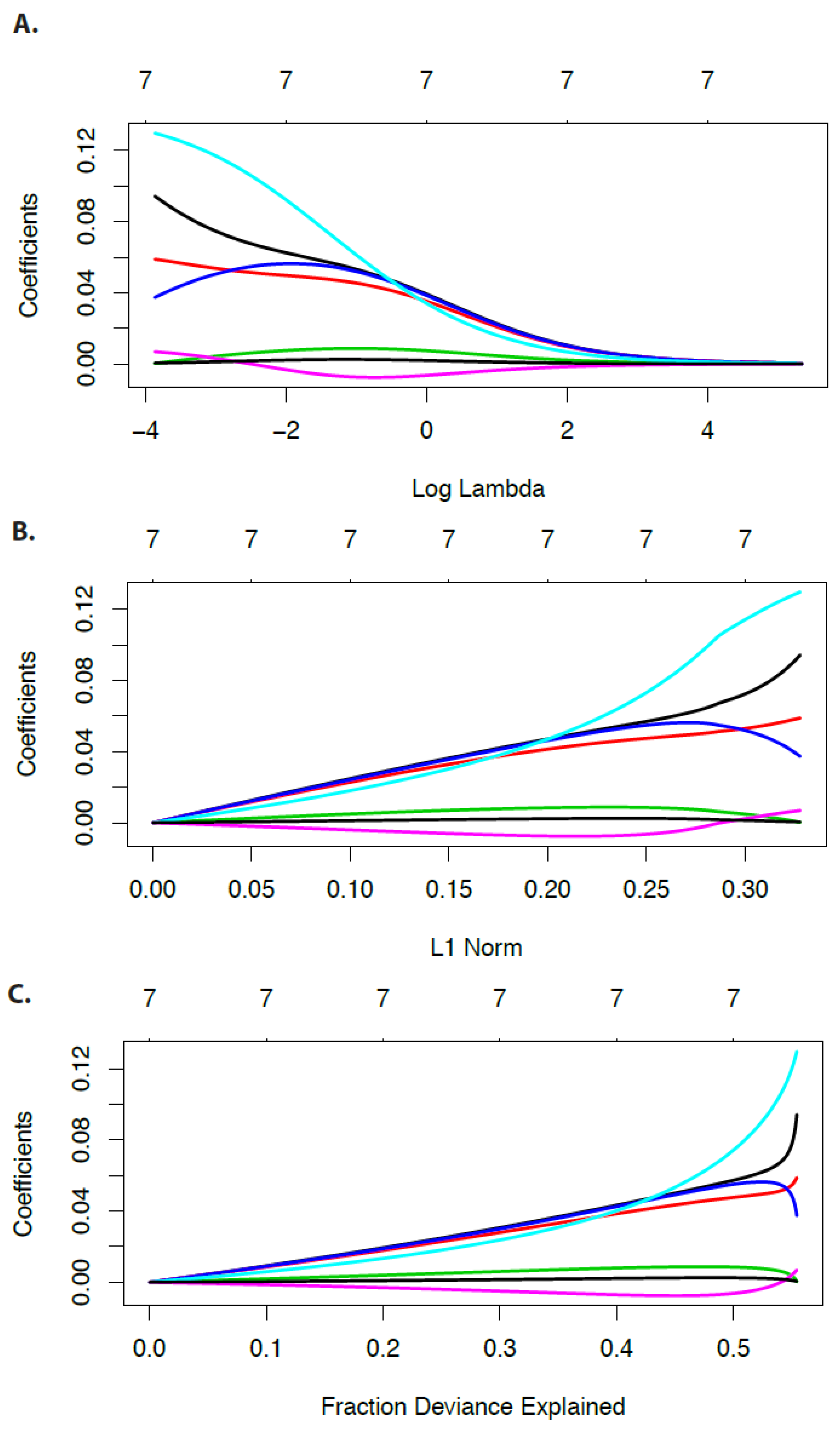

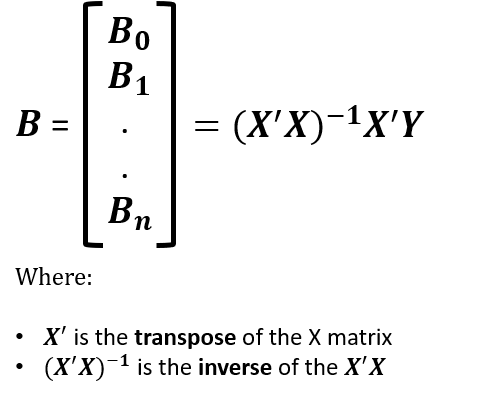

SOLVED: (30 pts) Consider ridge regression with $\hat{\beta} = \arg\min_{\beta} \sum_{i=1}^{n} (y_i - x_i^\top \beta)^2 + \lambda \|\beta\|_2^2$, where $x_i = [x_i^{(1)}, \dots, x_i^{(p)}]^\top$. (10 pts) Show that a closed-form expression for the ridge estimator is
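A minimal sketch of the closed form, assuming the standard result $\hat{\beta} = (X^\top X + \lambda I)^{-1} X^\top y$, where $X$ is the matrix whose rows are $x_i^\top$. It uses only the Python standard library; the helper names `solve` and `ridge` are hypothetical, and it solves the linear system $(X^\top X + \lambda I)\beta = X^\top y$ rather than forming the inverse explicitly.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def solve(a: Matrix, b: Vector) -> Vector:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Augmented copy [a | b] so the caller's data is not modified.
    m = [row[:] + [b_i] for row, b_i in zip(a, b)]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate the entries below the pivot.
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def ridge(X: Matrix, y: Vector, lam: float) -> Vector:
    """Closed-form ridge estimate: solve (X^T X + lam * I) beta = X^T y."""
    p = len(X[0])
    # Gram matrix X^T X with lam added on the diagonal.
    A = [[sum(row[j] * row[k] for row in X) + (lam if j == k else 0.0)
          for k in range(p)] for j in range(p)]
    # Right-hand side X^T y.
    rhs = [sum(row[j] * y_i for row, y_i in zip(X, y)) for j in range(p)]
    return solve(A, rhs)
```

For example, `ridge([[1, 0], [0, 1], [1, 1]], [1, 2, 3], 1.0)` gives approximately `[0.875, 1.375]`, and with `lam = 0.0` the function reduces to ordinary least squares whenever $X^\top X$ is invertible.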

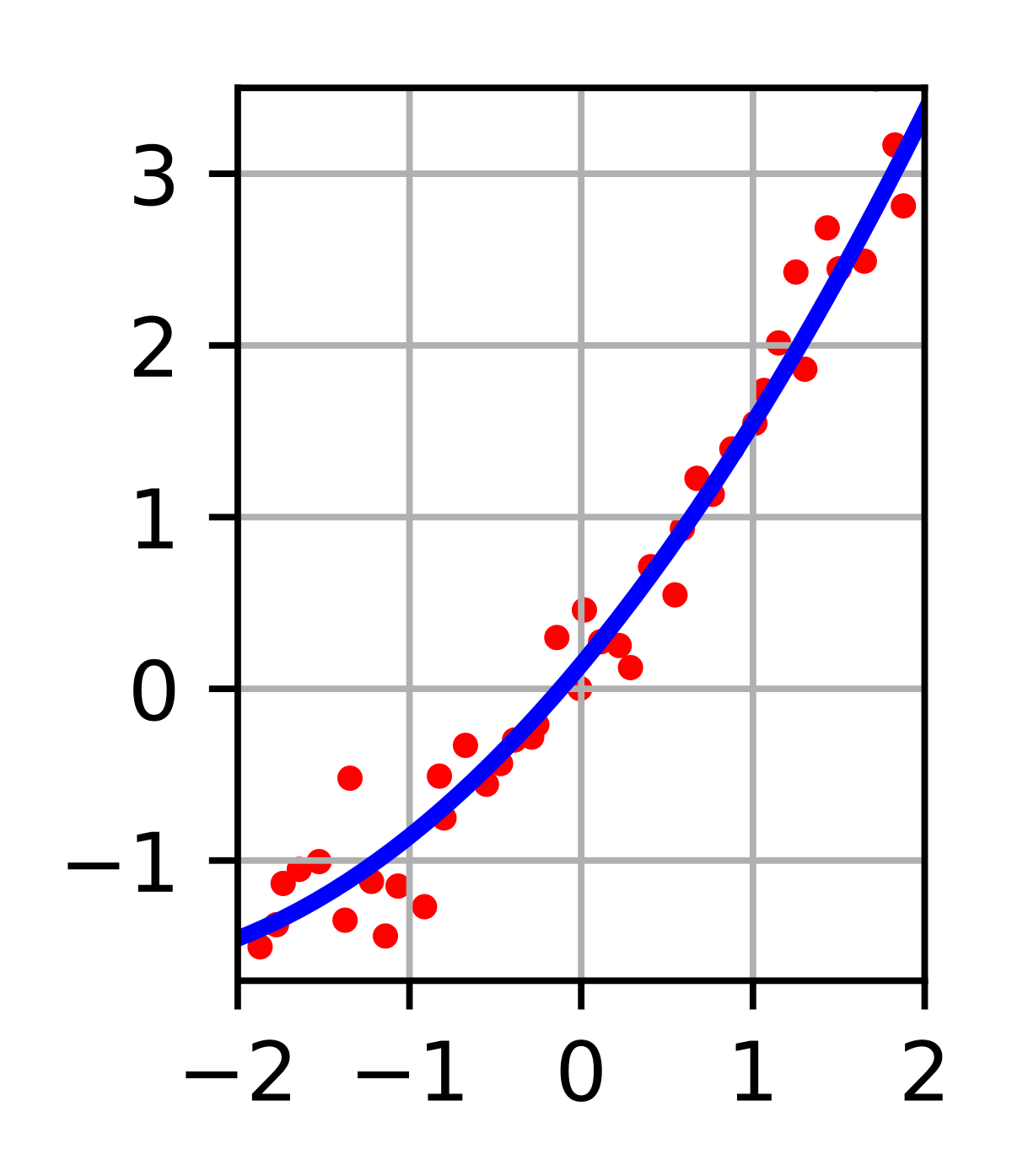

Linear Regression & Norm-based Regularization: From Closed-form Solutions to Non-linear Problems | by Andreas Maier | CodeX | Medium

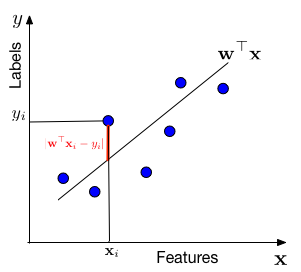

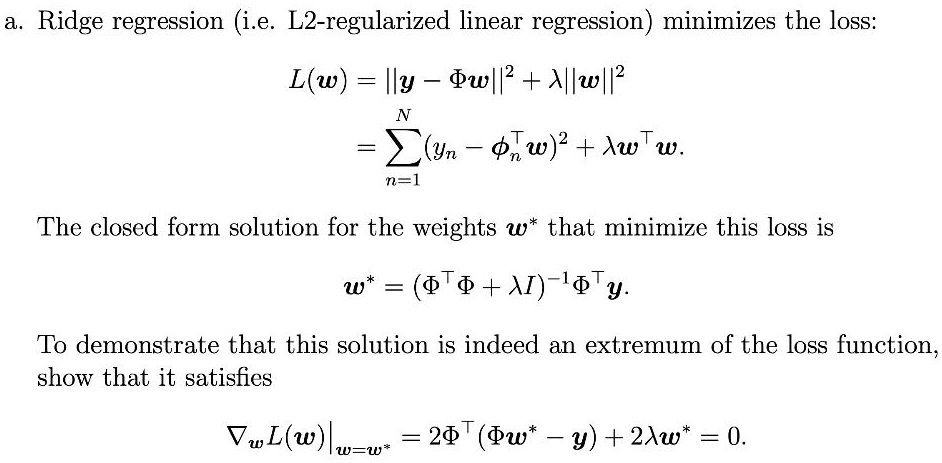

SOLVED: Ridge regression (i.e. L2-regularized linear regression) minimizes the loss $L(w) = \|y - \Phi w\|^2 + \lambda \|w\|^2 = \sum_{n=1}^{N} (y_n - \phi_n^\top w)^2 + \lambda w^\top w$. The closed-form solution for the weights $w$ that minimize
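One way to check a closed-form answer for this loss is to minimize $L(w)$ numerically and confirm that the gradient $\nabla L(w) = -2\Phi^\top(y - \Phi w) + 2\lambda w$ vanishes at the result. The sketch below does this with plain gradient descent, assuming $\Phi$ is given as a list of rows $\phi_n^\top$; the function names, the step size `lr`, and the step count are illustrative choices, not part of the original problem, and `lr` must be small enough for the data at hand.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def predict(Phi: Matrix, w: Vector) -> Vector:
    """Return Phi w, i.e. phi_n^T w for each row n."""
    return [sum(p_j * w_j for p_j, w_j in zip(row, w)) for row in Phi]


def loss(Phi: Matrix, y: Vector, w: Vector, lam: float) -> float:
    """L(w) = ||y - Phi w||^2 + lam * ||w||^2."""
    residuals = [y_n - f_n for y_n, f_n in zip(y, predict(Phi, w))]
    return sum(r * r for r in residuals) + lam * sum(w_j * w_j for w_j in w)


def gradient(Phi: Matrix, y: Vector, w: Vector, lam: float) -> Vector:
    """grad L(w) = -2 Phi^T (y - Phi w) + 2 lam w."""
    residuals = [y_n - f_n for y_n, f_n in zip(y, predict(Phi, w))]
    return [
        -2.0 * sum(row[j] * r for row, r in zip(Phi, residuals)) + 2.0 * lam * w[j]
        for j in range(len(w))
    ]


def fit_gd(Phi: Matrix, y: Vector, lam: float,
           lr: float = 0.05, steps: int = 2000) -> Vector:
    """Plain gradient descent on L(w), starting from w = 0."""
    w = [0.0] * len(Phi[0])
    for _ in range(steps):
        g = gradient(Phi, y, w, lam)
        w = [w_j - lr * g_j for w_j, g_j in zip(w, g)]
    return w
```

For $\Phi = [[1, 0], [0, 1], [1, 1]]$, $y = (1, 2, 3)$ and $\lambda = 1$, `fit_gd` returns approximately `[0.875, 1.375]`. This agrees with solving $(\Phi^\top\Phi + \lambda I)w = \Phi^\top y$ exactly, which here gives $w = (7/8, 11/8)$, and the gradient at that point is numerically zero.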

matrices - Derivation of Closed Form solution of Regularized Linear Regression - Mathematics Stack Exchange
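A sketch of the standard derivation in matrix notation, assuming a design matrix $X$ whose rows are $x_i^\top$ (this notation is chosen here and is not taken from the linked question). Setting the gradient to zero gives the normal equations; for $\lambda > 0$ the matrix $X^\top X + \lambda I$ is positive definite, so it is invertible and the Hessian $2(X^\top X + \lambda I)$ guarantees that the stationary point is the unique minimizer.

```latex
\begin{aligned}
L(\beta) &= (y - X\beta)^\top (y - X\beta) + \lambda \beta^\top \beta \\
\nabla_\beta L(\beta) &= -2X^\top (y - X\beta) + 2\lambda \beta \\
0 &= -2X^\top y + 2X^\top X \beta + 2\lambda \beta \\
(X^\top X + \lambda I)\,\beta &= X^\top y \\
\hat{\beta} &= (X^\top X + \lambda I)^{-1} X^\top y
\end{aligned}
```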

lasso - For ridge regression, show if $K$ columns of $X$ are identical then we must have same corresponding parameters - Cross Validated
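The claim follows from uniqueness: swapping the coefficients of two identical columns leaves both the residuals and the penalty $\lambda\|\beta\|_2^2$ unchanged, so the swapped vector is also a minimizer, and for $\lambda > 0$ the objective is strictly convex with a single minimizer, so the two coefficients must be equal. The sketch below checks this numerically on a small made-up design matrix whose first two columns are identical. Because $X^\top X + \lambda I$ is symmetric positive definite for $\lambda > 0$, it solves the system with a Cholesky factorization; the names `cholesky_solve` and `ridge_cholesky` and the sample data are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def cholesky_solve(A: Matrix, b: Vector) -> Vector:
    """Solve A x = b for symmetric positive-definite A via A = L L^T."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(A[i][i] - s)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    # Forward substitution: L z = b.
    z = [0.0] * n
    for i in range(n):
        z[i] = (b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i]
    # Back substitution: L^T x = z.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (z[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def ridge_cholesky(X: Matrix, y: Vector, lam: float) -> Vector:
    """Ridge estimate; lam > 0 keeps X^T X + lam * I positive definite."""
    p = len(X[0])
    A = [[sum(row[j] * row[k] for row in X) + (lam if j == k else 0.0)
          for k in range(p)] for j in range(p)]
    rhs = [sum(row[j] * y_i for row, y_i in zip(X, y)) for j in range(p)]
    return cholesky_solve(A, rhs)


# Hypothetical data: columns 0 and 1 are identical.
X = [[1.0, 1.0, 0.0], [2.0, 2.0, 1.0], [0.0, 0.0, 1.0], [1.0, 1.0, 2.0]]
y = [1.0, 3.0, 2.0, 4.0]
beta = ridge_cholesky(X, y, 0.5)
```

With $\lambda = 0.5$ both duplicated coefficients come out as $19.5/49.25 \approx 0.396$ and the third as $74.5/49.25 \approx 1.513$. With $\lambda = 0$ the matrix $X^\top X$ is singular for this $X$, so ordinary least squares has no unique solution; the penalty is what makes the estimate well defined.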